Headlines

April 25, 2024

AZ Family

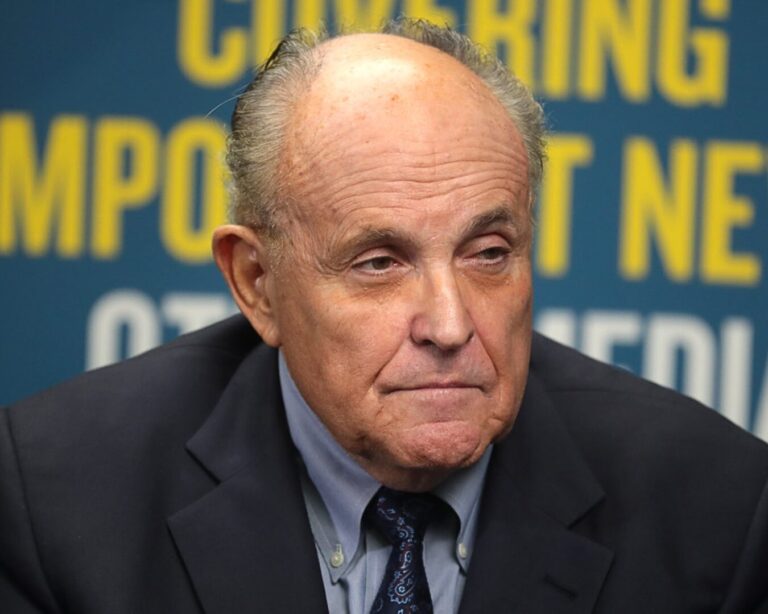

Giuliani and Others Indicted in AZ

Arizona Attorney General Kris Mayes indicted 11 "fake electors" and other Trump aides and allies, including Mark Meadows and Rudy Giuliani, for allegedly trying to overturn the 2020 election.

SCOTUSblog

SCOTUS To Hear Trump Immunity Defense

The Supreme Court will hear former President Trump's bid for criminal immunity, addressing whether he can be tried for conspiring to overturn the 2020 election results, a question that affects his cases in D.C., Florida, and Georgia.

Associated Press

Hamas Official on Terms for Disarming

A top Hamas official stated that the group would disarm and become a political party if a two-state solution establishing an independent Palestinian state along pre-1967 borders is implemented.

NBC News

Ukraine Uses Long-Range Missiles

Ukraine has used U.S.-supplied long-range ballistic missiles for the first time to target Russian forces in Crimea and eastern Ukraine, with the deployment kept secret for operational security.

The Arizona Republic

AZ House Repeals 1864 Abortion Law

The GOP-controlled Arizona House narrowly passed a bill to repeal an 1864 near-total abortion ban, with three Republicans joining all Democrats in support. The measure now moves to the Senate.

Idaho Capital Sun

Court Divided on Idaho Abortion Law

Supreme Court justices are divided over whether the Emergency Medical Treatment and Labor Act protects Idaho doctors from prosecution for terminating pregnancies during health emergencies.

The Jerusalem Post

Hamas Releases Hostage Video

Parents of Hersh Goldberg-Polin, a hostage shown in a recent Hamas video, urged him to "stay strong, survive" and called for urgent negotiations to secure the release of all hostages.

The New York Times

Trump Can Win by Losing Immunity

In the SCOTUS immunity case, Trump could benefit even from a loss if the ruling leads to procedural delays or added legal complexities, potentially postponing his trial until after the election.

BBC

TikTok To Fight "Unconstitutional" Ban

TikTok plans to challenge in court a new U.S. law, which it deems unconstitutional, requiring its Chinese owner, ByteDance, to divest the app, and expressed confidence in defending its users' rights.

The Washington Post

Why Sotomayor Debate Is Not Sexist

The debate over whether Sotomayor should retire reflects concerns about a potential conservative shift endangering liberal jurisprudence and broader anxieties about the stability of democracy.

Austin American-Statesman

Campus Protests Spread to UT-Austin

UT-Austin students held a pro-Palestinian protest, resulting in at least 20 arrests after police ordered the peaceful rally to disperse, substantially escalating tensions on the campus.

The Wall Street Journal

Don't Believe Crime Is Going Down

Despite media claims of declining crime, the decrease is due to underreporting, not an actual reduction, with official statistics and victim surveys indicating more unreported incidents.

Mediaite

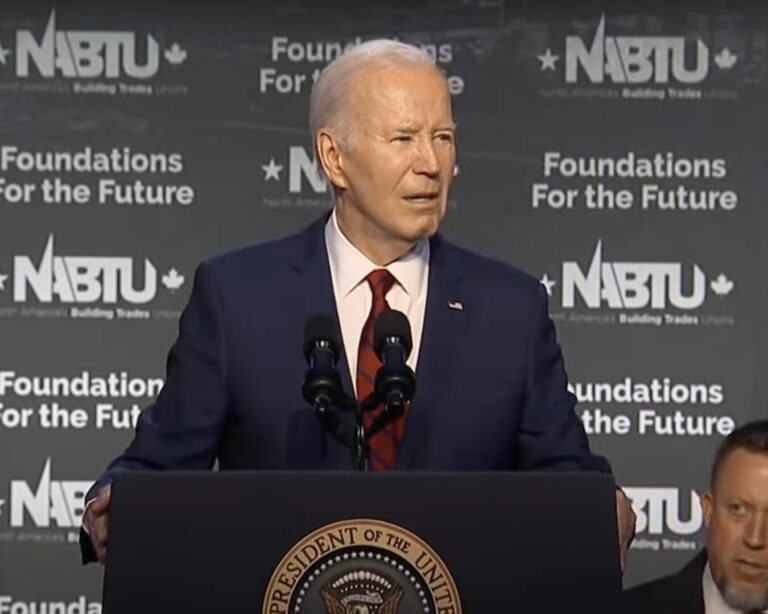

"Great Odin's Raven"

A video of President Biden accidentally reading teleprompter instructions, saying "Four more years. Pause." during a speech, went viral but was met with supportive chants from the audience.

CBS News

What To Know about Noncompete Ban

The new FTC rule bans nearly all noncompete agreements, freeing 30M workers from restrictive employment contracts, but faces potential legal challenges that could reach the Supreme Court.

Sky News

Military Horses Run Loose in London

Military horses from the Household Cavalry caused chaos in London after being spooked by construction noise, resulting in injuries and damage as several horses bolted through the streets.

CNBC

Truth Social Makes Trump Another Billion

DJT stock dropped 8% even as Trump qualified for 36 million bonus shares after the stock closed above the $17.50 threshold, raising the value of his stake in the company to an estimated $3.7 billion.

Silver Bulletin

Silver: Go to a State School

Nate Silver advises choosing state schools over Ivy League universities, citing the declining public image and growing political polarization of elite private colleges, trends he expects to worsen.

New York Post

Biden Campaign Will Remain on TikTok

Although President Biden signed a bill that could lead to a TikTok ban, his campaign will continue using the app to reach young voters, with enhanced security measures in place.

Axios

Trump's Warning in Suburbia

Trump's 2020 weakness with suburban voters persists in 2024, as shown by protest votes in the suburbs of Philadelphia, Milwaukee, and Atlanta even after Nikki Haley suspended her campaign.

Independent doesn’t mean indecisive.

© 2024 Smerconish. All rights reserved.

Privacy Policy | Website design by Creative MMS