Headlines

July 15, 2025

SCOTUSblog

SCOTUS Enables ED Reduction

The Supreme Court allowed the Trump administration to proceed with a sweeping reduction of the Department of Education's workforce, pausing a lower court's order to reinstate nearly 1,400 fired employees. Justice Sotomayor dissented sharply.

NBC News

Texas Officials Defend Flood Response

Texas officials defended their response to the floods that killed at least 132 people, saying they did everything possible under rapidly deteriorating conditions, but acknowledged that better communication tools could help save more lives in the future.

Associated Press

Netanyahu Coalition Rattled

Prime Minister Benjamin Netanyahu’s coalition was shaken as the ultra-Orthodox United Torah Judaism party announced its exit over a contentious military draft law, threatening the government's stability amid high-stakes Gaza ceasefire talks.

The Washington Post

Trump Finds Conspiracy He Doesn't Like

Philip Bump argues that after years of fueling conspiracy theories to his political advantage, Donald Trump now finds himself the target of one, as his ties to Jeffrey Epstein place him on the wrong side of the anti-elite narrative he once mastered.

ABC News

Mars Rock To Be Auctioned

A 54-pound Martian meteorite — the largest piece of Mars ever discovered on Earth — is set to go up for auction at Sotheby’s in New York during Geek Week 2025, with an estimated value of a whopping $2 million to $4 million.

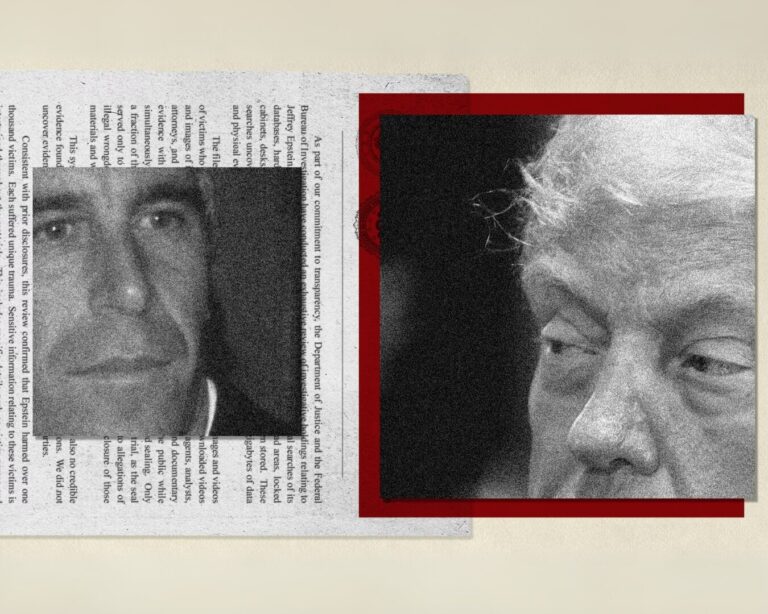

Mediaite

Did Epstein Have Dirt on Trump?

David Schoen, a lawyer who represented both Donald Trump and Jeffrey Epstein, revealed that he directly asked Epstein in his final days whether he had any damaging information on Trump, later stating on X that Epstein “had no information to hurt President Trump.”

ABC News

Idaho Book Reveals Murder Motive

A new book explores the chilling details and possible motive behind Bryan Kohberger’s brutal murders of four University of Idaho students, revealing that those closest to the victims believe he specifically targeted Madison Mogen after a perceived rejection.

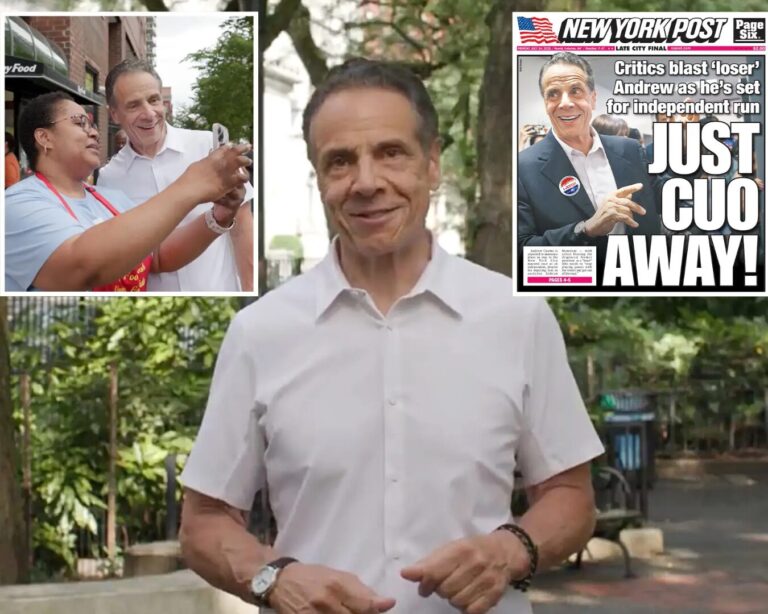

New York Post

Cuomo Confirms Independent Bid

Despite losing the Democratic primary, former Gov. Andrew Cuomo confirmed he will run an independent campaign for New York City mayor, vowing to drop out only if he’s not leading anti-socialist candidates in the November general election.

CNN

Obama's Message: "Toughen Up"

Former President Barack Obama urged Democrats to stop complaining and “toughen up” by actively standing up for their values, supporting candidates, and rebuilding momentum to challenge Trump’s influence and move the country forward.

NPR

The Power of Social Prescribing

Doctors around the world are increasingly “social prescribing” community activities like biking, dancing, and art—not just medicine—to improve physical and mental health by focusing on what matters most to patients.

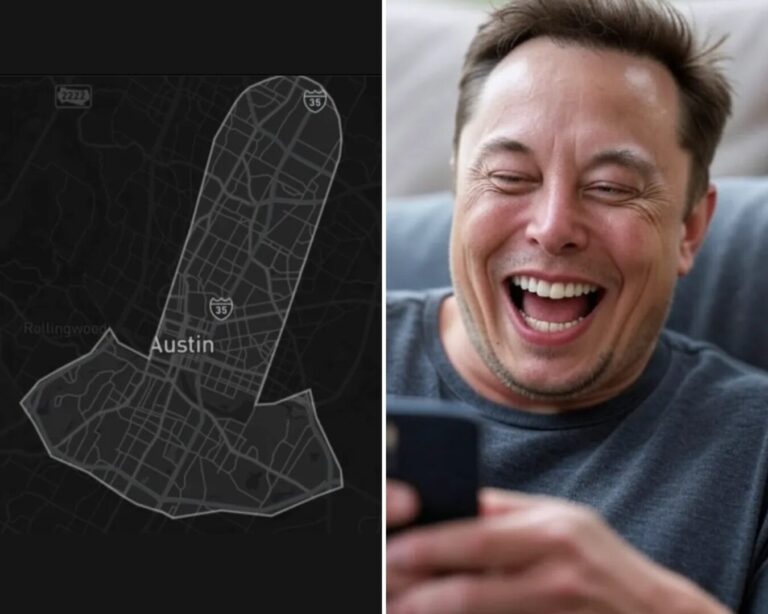

Electrek

Musk's Unusual Expansion Map

Tesla’s recent penis-shaped expansion of its Robotaxi service in Austin highlights not only CEO Elon Musk’s juvenile humor but also how far behind Tesla remains in serious autonomous ride-hailing compared to competitors like Waymo.

The New York Times

Billions Later: Waldorf Astoria Reopens

After nearly eight years and billions of dollars in renovations, the iconic Waldorf Astoria in New York reopens, blending restored historic grandeur with modern luxury to once again symbolize the city’s elegance and spirit.

The Atlantic

Why Trump Can’t Make Epstein Go Away

Donald Trump’s repeated reversals and shifting excuses about releasing Jeffrey Epstein’s client list have finally strained the limits of his supporters’ credulity, revealing that even his influence over their beliefs is not unlimited.

Quartz

Kids Turn To AI for Friendship

A new UK report, “Me, Myself, & AI”, reveals that many children are turning to AI chatbots not just for homework help but also for emotional support and friendship, sometimes because they have no one else to talk to.

Variety

First Look: New Harry Potter

HBO’s highly anticipated “Harry Potter” TV series has begun filming in the UK, featuring Dominic McLaughlin as the titular young wizard and setting a 2027 release date for its adaptation of the beloved books.

The Hill

Democrats Say Biden Needs To Refocus

Democrats are criticizing former President Biden for playing into Republican-led investigations and adopting a defensive posture that they say undermines the party’s efforts to go on offense against Trump ahead of the 2026 midterms.

KATU

Elmo's Twitter Account Hacked

Elmo’s X account, beloved by 650,000 followers for its messages of kindness, was hacked on Monday, resulting in a series of racist and antisemitic posts before Sesame Workshop regained control and deleted the offensive content.

Visual Capitalist

Visualized: State Job Changes

From 1998 to 2024, the most common jobs across U.S. states shifted dramatically from retail sales and cashier roles to fast food workers, home health aides, and logistics managers, reflecting changes in the economy, technology, and demographics.

Daily Mail

How the Girl Fell From Disney Cruise

A five-year-old girl fell from the Disney Dream cruise ship after losing her balance while sitting on a railing, and her father jumped into the ocean to save her. The account debunks rumors of negligence and highlights the crew’s swift rescue efforts.

© 2025 Smerconish. All rights reserved.

Privacy Policy | Website design by Creative MMS
