Headlines

March 6, 2026

CNBC

Amid escalating tensions with Iran, the stock market turned volatile: energy and defense stocks rose on oil supply concerns, while airline and travel stocks fell. Investors leaned toward safer assets like gold and bonds as economists warned of potential economic impacts from a prolonged conflict.

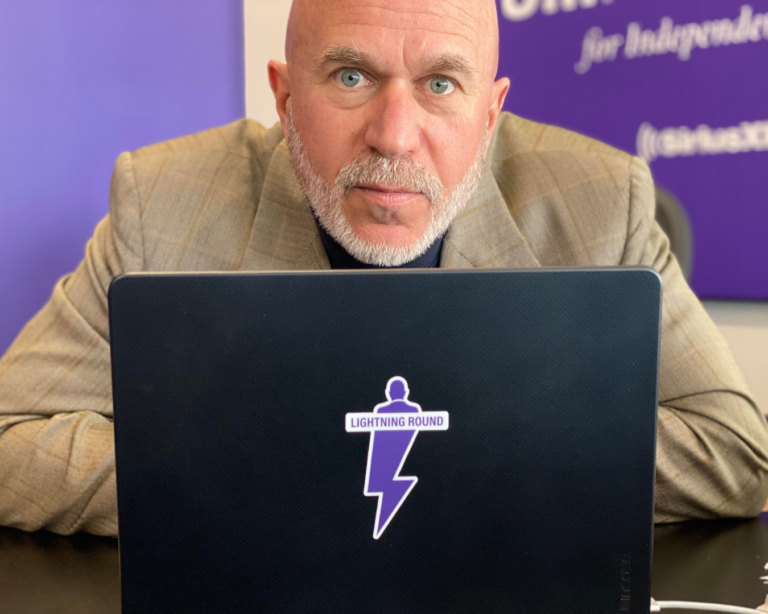

Michael A. Smerconish

Michael's Daily Thoughts: Friday Focus

The President gained television fame by uttering the words “you’re fired,” but that’s an expression not heard in Trump 2.0, even now. Notice that Secretary Noem has been exiled (Special Envoy for the Shield of the Americas) more than excommunicated...

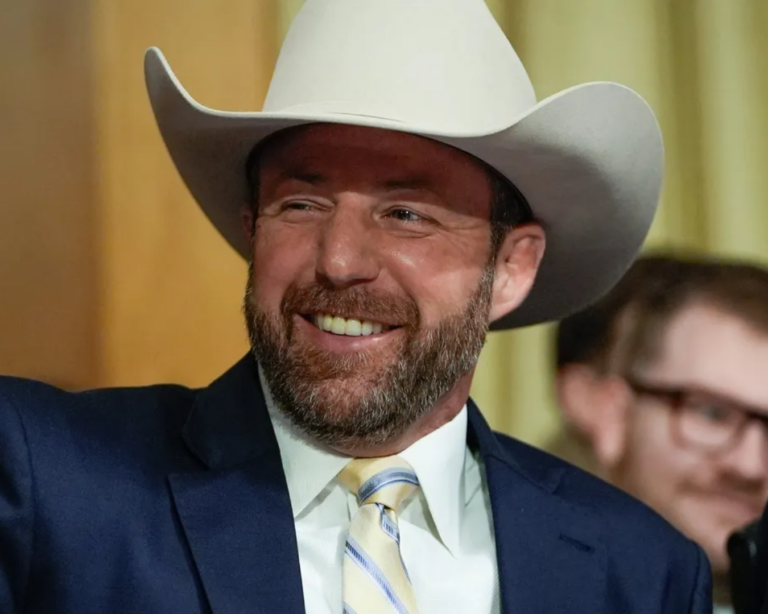

The Oklahoman

Mullin To Replace Noem

President Trump has nominated Oklahoma Sen. Markwayne Mullin to replace Kristi Noem as secretary of the Department of Homeland Security, elevating a close ally who strongly supports the administration’s immigration enforcement agenda while opening a soon-to-be vacant Senate seat in Oklahoma.

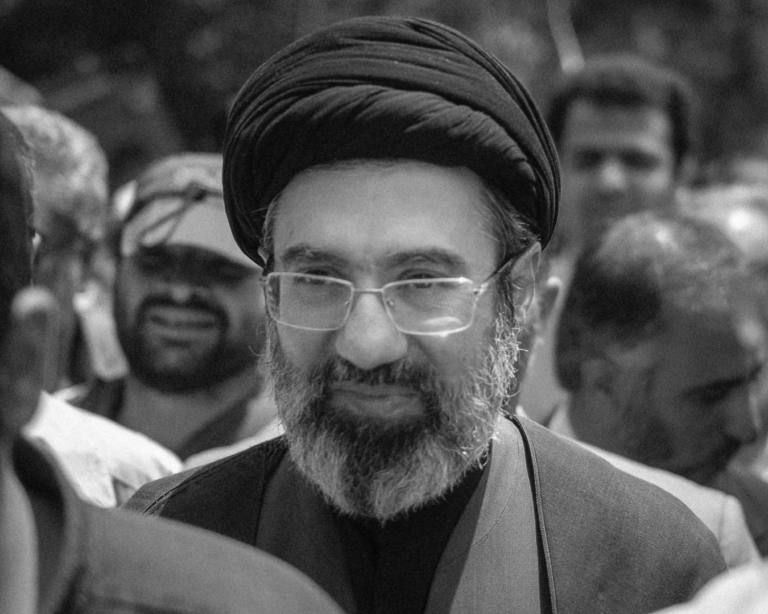

The Express

Iran Cleric Calls for Trump's Blood

Abdollah Javadi Amoli, a senior Iranian cleric and Iran's new Ayatollah, called for the “shedding of Donald Trump’s blood” in a televised address as tensions escalate after the United States and Israel launched strikes on Iran’s leadership, missile capabilities and nuclear facilities, sparking a widening regional conflict.

The Atlantic

"The Most Dangerous Man in the World"

A potential successor to his assassinated father, Mojtaba Khamenei is widely viewed by insiders as a hard-line power broker with little religious legitimacy, raising fears that his rise could further destabilize Iran’s already embattled theocratic system.

Axios

Trump Wants Say on Successor

Trump said he must be involved in selecting Iran's Supreme Leader following the death of Ali Khamenei, rejecting the likely succession of his son Mojtaba Khamenei and signaling a potential U.S. role in shaping Iran’s leadership amid the ongoing conflict.

CBS News

Gulf States Low on Interceptors

Arab states in the Persian Gulf are rapidly running out of missile interceptors as Iran continues launching drones and ballistic missiles across the region, prompting urgent requests for faster resupply from the United States.

Times of Israel

Trump on Israeli President: "A Disgrace"

Trump demanded that Israeli President Isaac Herzog immediately pardon Prime Minister Benjamin Netanyahu so he can focus on the war with Iran, calling Herzog a “disgrace” and threatening to refuse a meeting until the pardon is granted.

Associated Press

“Christ Is King” Controversy

The phrase “Christ is king,” long a core Christian declaration of faith, has become increasingly politicized in the U.S., with critics warning it is sometimes used by far-right figures and activists to promote Christian nationalism or antisemitic rhetoric.

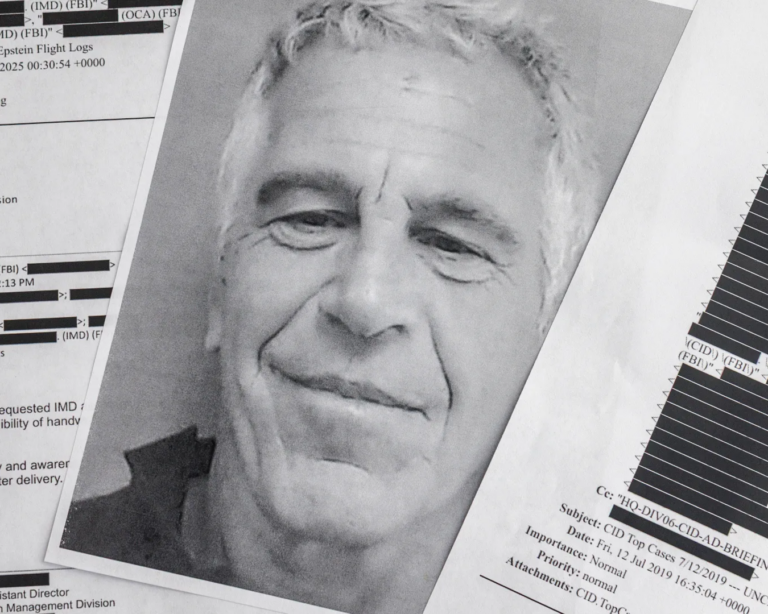

Al Jazeera

War Diverts Epstein Focus

An analyst says the U.S.- and Israel-led war on Iran has sharply diverted public and political attention away from the explosive Jeffrey Epstein files, with online interest and media focus dropping significantly as the conflict dominates headlines.

The New York Times

Parents of School Shooters Being Charged

As the U.S. struggles to curb mass shootings, prosecutors are increasingly charging parents whose actions or negligence enabled their children to access guns, a strategy supporters say creates accountability but critics warn may overextend criminal liability.

The Wall Street Journal

Rahm Keeps Rolling Out Policies

Rahm Emanuel is sharply criticizing the Democratic Party for focusing too heavily on cultural issues rather than economic concerns as it prepares for the 2026 midterms, while floating ideas that could shape a potential 2028 presidential run.

NBC News

Guthrie Returns to TODAY Set

It was an emotional reunion when Savannah Guthrie briefly visited the TODAY studio to see colleagues as she continues focusing on the search for her missing 84-year-old mother, Nancy Guthrie, whose disappearance in Tucson remains under investigation.

404 Media

Scammers Send ICE Support Notices

Scammers are targeting users of email marketing platforms like Emma with phishing emails claiming a mandatory “Support ICE” donation button will be added to their messages, tricking recipients into logging into fake sites and handing over their credentials.

Politico

Trump to Cuba... You're Next

Trump projected confidence about the ongoing conflict with Iran while suggesting the U.S. could help shape the country’s next leadership, predicting the possible collapse of Cuba’s government, and voicing impatience with Volodymyr Zelenskyy over stalled negotiations.

ABC News

Twins' Lesson in Civility

Identical twins Nick Roberts and Nathan Roberts — one a liberal Democrat and the other a MAGA Republican — are modeling rare political civility in Indianapolis by remaining close despite fiercely opposing views and even testifying against each other in state politics.

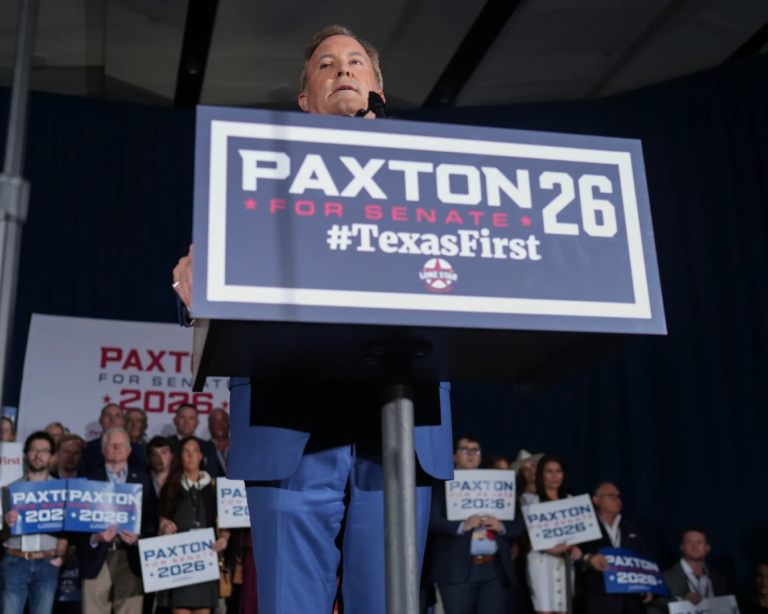

Texas Tribune

Paxton Has Conditions for Exit

Texas AG Ken Paxton said he would consider dropping out of the Republican Senate runoff against John Cornyn if Senate GOP leaders agree to abolish the filibuster and pass the SAVE America Act—a sweeping voter ID measure supported by Donald Trump—using the move to pressure his opponent.

MLive

Michigan Transparency Effort Stalls

A bipartisan effort to expand Freedom of Information Act access in Michigan has stalled in the state House after Speaker Matt Hall said he would not take up the bills, leaving Michigan and Massachusetts as the only states where residents cannot file FOIA requests with the governor’s office or legislature.

Pew Research Center

U.S. Morality Divide

A Pew Research Center survey across 25 countries finds the United States is the only nation where a majority of adults say their fellow citizens have bad morals and ethics, with 53% expressing a negative view shaped partly by partisan divides.

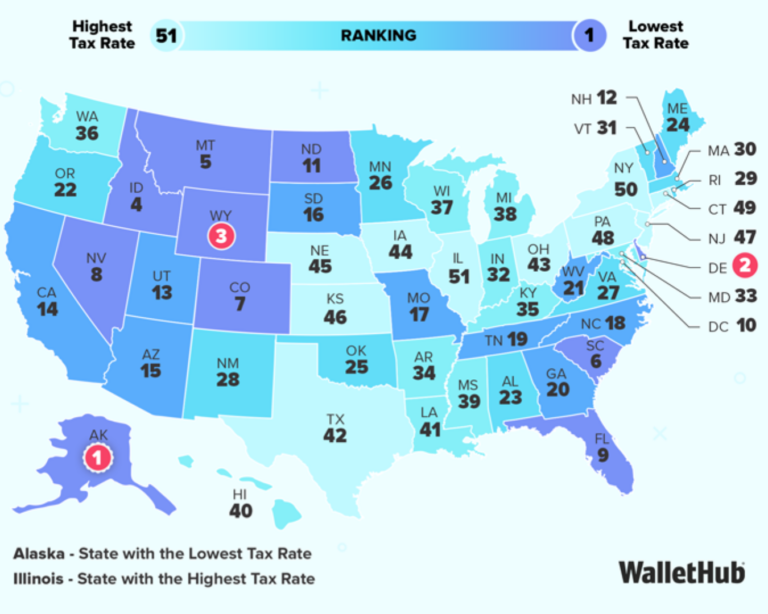

WalletHub

Tax Rates Across US States

A new analysis finds major differences in state and local tax burdens across the U.S., with residents in the highest-tax states paying more than twice as much as those in the lowest-tax states. Alaska sits at the low end and Illinois at the high end.
